Theism faces the problem of evil: why, in a universe created by a perfectly good being, do bad things happen? This has been variously answered by God's creation of free will (a concept that turns out to be slippery to define), by the existence of a lesser deity that does evil, or simply by the claim that God's ways are inscrutable.

Atheists face the opposite problem: how does good come to exist in a universe governed only by the laws of physics? The theory of evolution by natural selection, and in particular the theory of kin selection, predicts that people should care only for themselves and, to a lesser extent, for their relatives.

Atheists have answered this problem in various ways. Under certain contrived circumstances, selfish people will act for the common good. This has been studied particularly in the case of the prisoner's dilemma repeated a random number of times (i.e. a number unknown to the participants). Games such as this are the basis of our economic system (in theory, at least). They have the useful property that we can model them with some accuracy using game theory.
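The repeated game described above can be made concrete with a little arithmetic. The sketch below is standalone Python 3 and is not taken from TPOG; it assumes that after each round another round is played with probability `w`, uses the conventional payoffs T=5, R=3, P=1, S=0, and compares tit-for-tat against only one deviation, always defecting.

```python
"""Expected payoffs in a prisoner's dilemma repeated an unknown number of times.

Assumption: after every round, another round is played with probability w,
so neither player knows when the game will end. The payoff values are the
conventional T > R > P > S numbers, chosen for illustration only.
"""

T, R, P, S = 5, 3, 1, 0  # temptation, reward, punishment, sucker's payoff


def tft_vs_tft(w: float) -> float:
    """Expected total for tit-for-tat facing tit-for-tat: R in every round."""
    return R / (1 - w)


def defector_vs_tft(w: float) -> float:
    """Expected total for always-defect facing tit-for-tat: T once, then P thereafter."""
    return T + w * P / (1 - w)


def cooperation_is_stable(w: float) -> bool:
    """True if a selfish player gains nothing by always defecting against tit-for-tat."""
    return tft_vs_tft(w) >= defector_vs_tft(w)


def critical_w() -> float:
    """Smallest continuation probability at which cooperation pays: (T - R) / (T - P)."""
    return (T - R) / (T - P)


if __name__ == "__main__":
    for w in (0.25, 0.5, 0.9):
        print(w, round(tft_vs_tft(w), 2), round(defector_vs_tft(w), 2), cooperation_is_stable(w))
    print("critical w:", critical_w())
```

With these numbers a selfish player does best by cooperating whenever `w` is at least (T - R)/(T - P) = 0.5, that is, whenever the game is expected to last at least two rounds.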

This gets us some way to an answer, but it does not explain why people not only sometimes act good, but seem to actually be good. One answer, which seems to be in fashion at the moment, is that humans, with their novel capacity for reason, can transcend the desires programmed by evolution. While this is not a theistic answer, it is an appeal to the supernatural, and I am personally unconvinced. It also has the problem that we cannot model it with game theory. Even if people can transcend, the rational agents of game theory cannot, and so whenever we try to construct mathematical models we fall back on the idea that people are selfish and that good is only a fortunate accident.

My own answer is that under the right circumstances it is rational to decide to become a person who cares for other people's welfare.

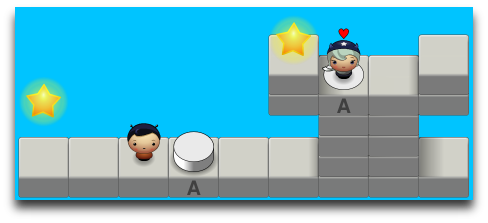

I present my answer in the form of a two-player platform game, in order to demonstrate that it is amenable to modeling.

Download

You will need Python 2 and PyGame:

TPOG has been tested under Linux, but should work on other platforms.

This is GPL software; please tinker with it. The game graphics are from Danc's awesome PlanetCute sprite set.

Playing the game

You control Player 1, the computer controls Player 2. The goal of the game is the same for both of you: to collect as many stars as possible. Each star is worth 10 points.

Other things being equal, Player 2 is lazy. Except by accident, she won't help you unless she has a reason to do so, even if helping would cost her nothing. She assumes the same of you.

You can move and jump by pressing the arrow keys.

You can fall in love with Player 2 by pressing the "L" key. This means you will act as though Player 2 collecting a star is worth 5 points to you. This simulates the same amount of love as kin selection predicts siblings should feel towards each other, since full siblings share on average half their genes [1]. Points will be deducted for bad role-playing.

Similarly Player 2 may decide to fall in love with you.

Falling in love is visible to the other player. Otherwise there would be no reason to ever do this.
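The claim that it can be rational to fall in love, and the reason visibility matters, can be checked by backward induction on a toy scenario. The sketch below is standalone Python 3, not code from TPOG: the scenario, its payoffs, and the names `utility`, `player1_route` and `solve` are hypothetical. Only the 10 points per star and the 0.5 love weight come from the text.

```python
"""Why a visible change of heart can pay: backward induction on a toy scenario.

Hypothetical scenario (not a level from TPOG): Player 2 moves first and either
STAYs put, keeping one star, or HELPs (say, by holding a switch) and gives up
that star. If helped, Player 1 picks one of two routes. Only the 10 points per
star and the 0.5 love weight come from the text; every payoff is invented.
"""

STAR = 10  # points per star, as in the game

# Outcomes as (Player 1's stars, Player 2's stars); hypothetical numbers.
STAY = (0, 1)         # Player 2 does not help; Player 1 reaches nothing
GRAB_ALL = (2, 0)     # helped, Player 1 takes both stars on the short route
SHARE_ROUTE = (1, 3)  # helped, Player 1 takes one star and frees three for Player 2


def utility(own_stars, other_stars, love):
    """Points as a player experiences them: own stars plus `love` times the other's."""
    return STAR * own_stars + love * STAR * other_stars


def player1_route(love):
    """The route Player 1 prefers once helped, judged with the given love weight."""
    return max((GRAB_ALL, SHARE_ROUTE), key=lambda o: utility(o[0], o[1], love))


def solve(love_p1, visible=True):
    """Outcome when a purely selfish Player 2 anticipates Player 1's move.

    If Player 1's love is not visible, Player 2 assumes Player 1 is selfish.
    """
    predicted = player1_route(love_p1 if visible else 0.0)
    # Player 2 is lazy: she helps only if it strictly raises her own star count.
    if predicted[1] > STAY[1]:
        return player1_route(love_p1)  # what Player 1 actually does once helped
    return STAY


if __name__ == "__main__":
    for love, visible in ((0.0, True), (0.5, False), (0.5, True)):
        p1, p2 = solve(love, visible)
        print(f"love={love} visible={visible}: Player 1 scores {STAR * p1}, Player 2 scores {STAR * p2}")
```

In this toy scenario, falling in love means Player 1 would settle for one star instead of two, but because Player 2 can see it, she now helps, and Player 1's real score rises from 0 to 10. Hidden love changes nothing.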

The game presents several different scenarios. Each scenario is independent.

At the start of each scenario, both players are purely selfish.

Your actions in a previous scenario have no effect on the next one.

Future

There are a few things I have not implemented in this game that would bring it closer to real life.

- Diminishing returns on stars. Presently players in love will not share stars. If they can, they will take them all for themselves for values of love less than 100%, or give them all to the other player for values of love greater than 100%. Diminishing returns would cause players to share stars, allowing more possibilities for cooperation.

- Chance events. If there are chance events, mutual love can serve as an insurance policy.

- Hidden or simultaneous moves. Simultaneous moves are somewhat more difficult to model than turn-taking. Mutual love is a way to solve the notorious prisoner's dilemma, which involves simultaneous moves.

- More than two players in the universe.
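Two of these extensions, diminishing returns and the prisoner's dilemma, can be checked with a few lines of arithmetic. The sketch below is standalone Python 3, not part of TPOG; the square-root value curve, the payoffs T=5, R=3, P=1, S=0, and all function names are assumptions made for illustration.

```python
"""Two extensions from the list above, as standalone arithmetic (Python 3).

Assumptions for illustration only: diminishing returns are modelled as a
square-root value curve, and the prisoner's dilemma uses the conventional
payoffs T=5, R=3, P=1, S=0. Neither comes from TPOG.
"""
import math

STAR = 10  # points per star, as in the game


def linear(k):
    """Current game: every star is worth the same."""
    return STAR * k


def concave(k):
    """Hypothetical diminishing returns: each extra star is worth a bit less."""
    return STAR * math.sqrt(k)


def best_split(n, love, value):
    """Stars (out of n) a player keeps, maximising value(own) + love * value(other's)."""
    return max(range(n + 1), key=lambda k: value(k) + love * value(n - k))


T, R, P, S = 5, 3, 1, 0  # temptation, reward, punishment, sucker's payoff
PAYOFF = {  # (my move, their move) -> (my payoff, their payoff)
    ("C", "C"): (R, R),
    ("C", "D"): (S, T),
    ("D", "C"): (T, S),
    ("D", "D"): (P, P),
}


def felt(me, them, love):
    """Payoff as felt by a player who values the other's payoff at `love`."""
    own, other = PAYOFF[(me, them)]
    return own + love * other


def cooperation_dominant(love):
    """True if cooperating is at least as good as defecting whatever the other does."""
    return all(felt("C", them, love) >= felt("D", them, love) for them in "CD")


if __name__ == "__main__":
    print(best_split(10, 0.5, linear), best_split(10, 1.5, linear), best_split(10, 0.5, concave))
    print(cooperation_dominant(0.5), cooperation_dominant(1.0))
```

With linear value, a player at 50% love keeps all 10 stars and at 150% gives them all away, as the first bullet says; with the square-root curve the same 50% love keeps 8 and gives away 2 (the continuous optimum is n / (1 + love^2)). For the prisoner's dilemma with these payoffs, cooperation becomes dominant once love reaches (T - R)/(R - S) = 2/3, so the game's 50% would not quite suffice here; the threshold depends on the payoffs chosen.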

Finally, a couple of notes on how this effect, if it exists, should appear in the real world.

- There is not enough time in an individual's lifetime to learn how best to use this effect. Our genes would have evolved to optimize the overall strategy, and prompt us to implement it using their usual proddings of emotion. So I would expect our subjective experience of it would be a strong desire to enter into such arrangements, and pleasure upon doing so -- much as we feel hunger, and satisfaction from eating, or curiosity, and pleasure at finding things out.

- The evolution of the ability to alter one's motivations should be trivial, but the necessary corresponding ability to assess the motivations of others, which is what makes this first ability useful, is more difficult. However, I think it should be within the capabilities of at least most mammals. Suitably constructed behavioral experiments on animals should be able to detect these abilities.

This TED talk by Rebecca Saxe looks like a good start at investigating the ability of humans to assess the motivations of others, by temporarily disabling this ability via transcranial magnetic stimulation.

[1] Of course, according to my theory, siblings may rationally decide to care for each other somewhat more than this.